Google Gemini 2.0 Flash Thinking AI Model With Advanced Reasoning Capabilities Launched

The model uses increased inference-time computation to deliver advanced reasoning capabilities.

Photo Credit: Google

Google claims the AI model can solve complex reasoning and mathematics questions at a high speed

Google released a new artificial intelligence (AI) model in the Gemini 2.0 family on Thursday that focuses on advanced reasoning. Dubbed Gemini 2.0 Flash Thinking, the new large language model (LLM) uses more inference-time computation, allowing it to spend more time on a problem. The Mountain View-based tech giant claims that it can solve complex reasoning, mathematics, and coding tasks. Additionally, the LLM is said to perform tasks at a high speed, despite the increased processing time.

Google Releases New Reasoning-Focused AI Model

In a post on X (formerly known as Twitter), Jeff Dean, the Chief Scientist at Google DeepMind, introduced the Gemini 2.0 Flash Thinking AI model and highlighted that the LLM is “trained to use thoughts to strengthen its reasoning.” It is currently available in Google AI Studio, and developers can access it via the Gemini API.

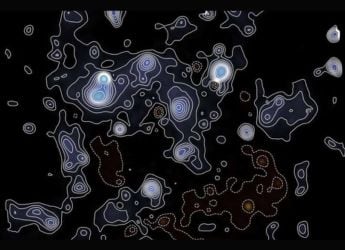

Gemini 2.0 Flash Thinking AI model

Gadgets 360 staff members were able to test the AI model and found that the reasoning-focused Gemini model easily solves complex questions that are too difficult for the 1.5 Flash model. In our testing, the typical processing time was between three and seven seconds, noticeably faster than OpenAI's o1 series, which can take upwards of 10 seconds to process a query.

Gemini 2.0 Flash Thinking also shows its thought process, letting users check how the AI model reached a result and the steps it took to get there. We found that the LLM arrived at the right solution eight out of 10 times. Since it is an experimental model, mistakes are to be expected.

While Google did not reveal details about the AI model's architecture, it highlighted the model's limitations in a developer-focused blog post. Currently, Gemini 2.0 Flash Thinking has an input limit of 32,000 tokens and accepts only text and images as inputs. It supports only text as output, with a limit of 8,000 tokens. Further, the API does not come with built-in tool usage such as Search or code execution.
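
For developers, the limits listed above can be expressed as a simple pre-flight check. The following is a minimal sketch and not part of Google's API: `PlannedRequest` and `check_request` are hypothetical names, the token counts are assumed to be computed elsewhere, and only the numeric limits and supported input and output types come from the figures reported here.

```python
from dataclasses import dataclass

# Limits as reported in the article; all names below are hypothetical.
MAX_INPUT_TOKENS = 32_000
MAX_OUTPUT_TOKENS = 8_000
SUPPORTED_INPUT_TYPES = {"text", "image"}
SUPPORTED_OUTPUT_TYPES = {"text"}


@dataclass
class PlannedRequest:
    """A description of a request we intend to send (illustrative only)."""
    input_tokens: int          # assumed to be counted by the caller
    input_types: set           # e.g. {"text", "image"}
    output_type: str           # e.g. "text"
    max_output_tokens: int     # requested output budget
    uses_tools: bool = False   # e.g. Search or code execution


def check_request(req: PlannedRequest) -> list:
    """Return a list of human-readable problems; empty if none are found."""
    problems = []
    if req.input_tokens > MAX_INPUT_TOKENS:
        problems.append(f"input exceeds {MAX_INPUT_TOKENS} tokens")
    unsupported = req.input_types - SUPPORTED_INPUT_TYPES
    if unsupported:
        problems.append(f"unsupported input types: {sorted(unsupported)}")
    if req.output_type not in SUPPORTED_OUTPUT_TYPES:
        problems.append(f"unsupported output type: {req.output_type}")
    if req.max_output_tokens > MAX_OUTPUT_TOKENS:
        problems.append(f"output budget exceeds {MAX_OUTPUT_TOKENS} tokens")
    if req.uses_tools:
        problems.append("built-in tools are not available")
    return problems
```

A caller could build a `PlannedRequest` before contacting the API and skip the call if `check_request` returns any problems. Since the model is experimental, these limits may change.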
