Meta's SAM 3 Open-Source AI Models Can Detect, Track and Construct 3D Models of Objects in Images

Meta’s SAM 3D models can detect and segment any object in an image and convert it into a 3D model.

Photo Credit: Meta

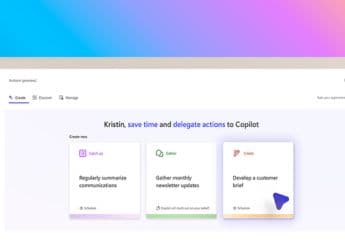

SAM 3 and SAM 3D are also powering Facebook Marketplace’s new View in Room feature

Meta has released its Segment Anything Model (SAM) 3 series of artificial intelligence (AI) models. With this latest generation, the company is adding some of the most requested features, such as support for text prompts and suggested prompts. The models can detect and segment objects in images and even create 3D models of any object or person in them. In videos, they can segment and track objects and people. Like the previous generation, these are open-source models that can be downloaded and run locally.

Meta Releases SAM 3 and SAM 3D AI Models

In two separate blog posts, the Menlo Park-based tech giant detailed the new AI models. There are three in total: SAM 3 for image and video segmentation and tracking, SAM 3D Objects for detecting objects in images and creating 3D models of them, and SAM 3D Body, which can generate 3D models of humans.

SAM 3 builds on earlier versions (SAM 1 and SAM 2) and adds the ability to segment based on text prompts, enabling natural-language object detection in images and videos. Whereas previous models required users to click on points or draw boxes around the regions they wanted, SAM 3 accepts short noun phrases such as “red baseball cap” or “yellow school bus” and generates segmentation masks for every matching instance in a scene.

Meta highlights that this was one of the capabilities most requested by the open-source developer community. The model uses a unified architecture that combines a perception encoder with a detector and a tracker, supporting both image and video workflows with minimal user input.

Meanwhile, SAM 3D enables 3D reconstruction from a single 2D image. Built with a progressive training pipeline and a new 3D data engine, it can handle occlusion, clutter and other real-world complexity, and outputs detailed meshes with textured geometry.

The models can be downloaded from Meta's GitHub and Hugging Face listings, or directly from the blog posts. Notably, they are available under the SAM Licence, a custom licence Meta created specifically for these models, which allows both research and commercial use.

Apart from making the models available to the open-source community, Meta has also launched the Segment Anything Playground, an online platform where anyone can try out these models without having to download them or run them locally.

Meta is also integrating these models into its own platforms. SAM 3 will soon come to Instagram's Edits app, offering new effects that creators can apply to specific people or objects in their videos. The same capability is also being added to Vibes in the Meta AI app and on the Meta AI website. Separately, SAM 3D now powers Facebook Marketplace's new View in Room feature, which lets users visualise the style and fit of home decor items in their spaces before making a purchase.
